The metaverse sits at the intersection of several emerging technologies and innovations, ranging from edge computing to the Web3 economy. AI is undoubtedly one of them. This article focuses on how the current evolution of AIGC could shape the future metaverse, in both its applications and its design process.

Snapshot of AIGC

The recent popularity of ChatGPT, an advanced chatbot launched in November 2022, has put the spotlight on the potential of AI-generated content (AIGC). In the metaverse field, AIGC extends beyond text-to-text generators: users can now generate 3D models, animations, and images from several forms of input.

Gaming

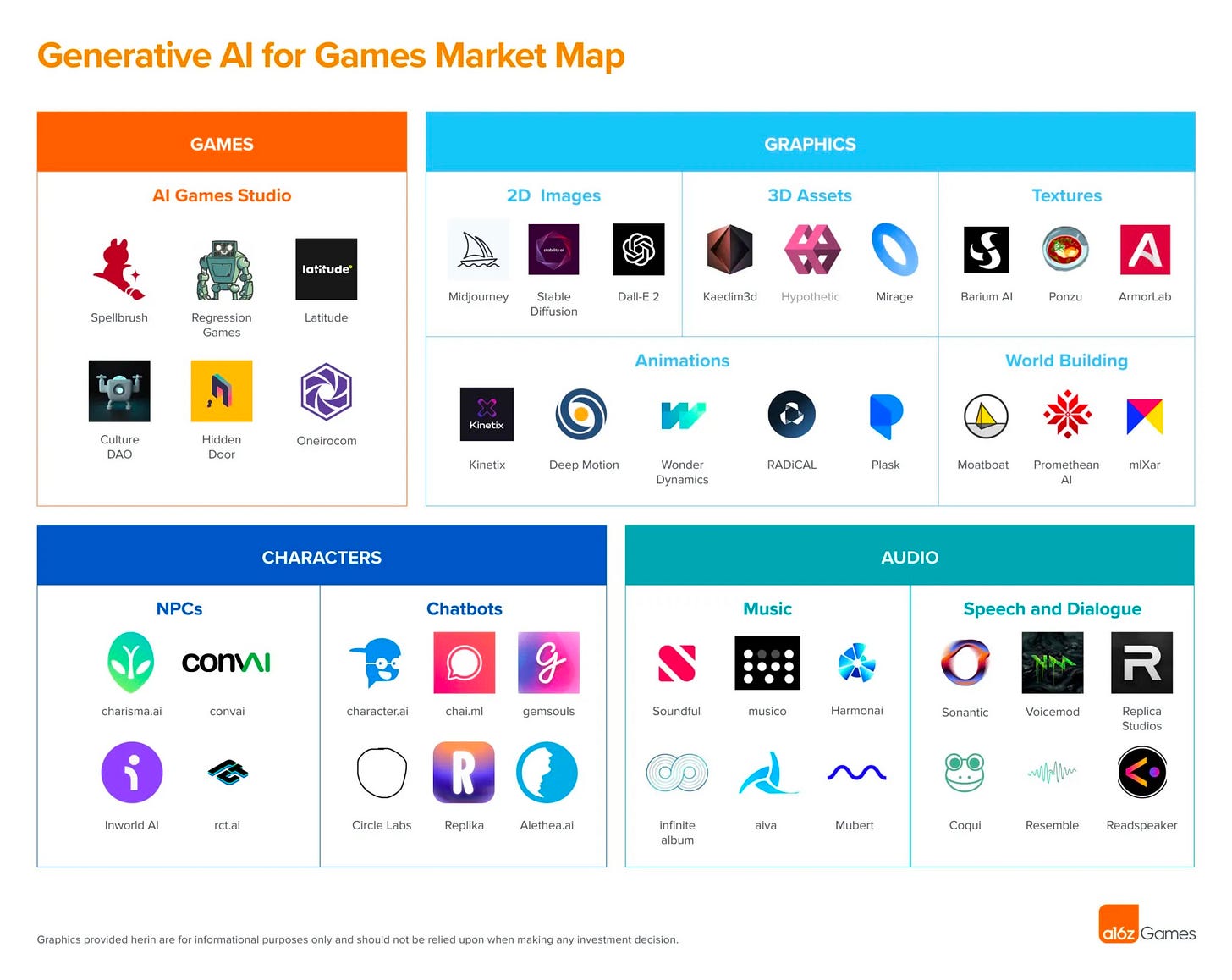

These AI tools, most of which launched within the past year, have already affected gaming studios’ design processes (AIGC can help produce every kind of gaming asset) and team formation (labor costs are falling and designers are saving time). As gaming remains the driving force of the metaverse, the spread of AIGC across the games market implies a deep impact on the metaverse going forward.

Avatar

In the movie “Free Guy”, the NPCs in an open-world game, once programmed with fixed behavior patterns, come to life after developing their own consciousness, leading to twists and turns. That scenario may not be so far from reality: teams like EA's Search for Extraordinary Experiences Division (SEED) have developed an AI agent capable of teaching itself from scratch to play Battlefield 1.

“In future titles, as deep learning technology matures, I expect self-learning agents to be part of the games themselves, as truly intelligent NPCs that can master a range of tasks, and that adapt and evolve over time as they accumulate experience from engaging with human players.” - SEED technical director

Currently, no self-learning avatar products have launched on the market, but startups like Soul Machines and big companies (NVIDIA, IBM, Meta, etc.) are working to create more adaptive virtual avatars for the metaverse. These could be used in industries such as technology (sales, customer service), finance (bank accounts, financial planning, administration), consumer goods (brand ambassadors, marketing), entertainment (intelligent NPCs), healthcare, and education (tutors).

These self-learning avatars aim to adapt their behavior based on user interaction over time, with a strong emphasis on personalization, such as customized appearances and personalized recommendations.

Storyline

AIGC can enable procedural generation, in which different users see different dynamically generated episodes and so experience the game uniquely, increasing the randomness in games. As AI factors in user data and behavior, the storyline can also adapt, for example through customized avatars, plots, and environments. Gaming platforms like Roblox are embracing generative AI, expecting AIGC to lower the entry barrier for users to become creators and to bring more people from AI communities onto the platform. As Unity stated in its 2023 gaming report, the faster output and shorter development times of smaller gaming studios may be largely due to generative AI, which suggests that more studios are starting to adopt it.
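
To make the idea of per-user procedural generation concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions, not how Roblox or any studio implements it: the content pools, the function names (`episode_seed`, `generate_episode`), and the `favorite_setting` parameter are all hypothetical. Each user gets a repeatable episode derived from a seed, and a recorded preference nudges the random draw to stand in for the adaptive storyline.

```python
import hashlib
import random

# Hypothetical content pools, invented for this illustration.
SETTINGS = ["harbor town", "orbital station", "desert ruins", "forest village"]
GOALS = ["rescue a lost courier", "repair a broken beacon", "win a street race"]
TWISTS = ["an ally turns rival", "a storm splits the map", "the reward is a decoy"]


def episode_seed(user_id, episode):
    """Derive a stable 64-bit seed from the user ID and episode number."""
    digest = hashlib.sha256(f"{user_id}:{episode}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def generate_episode(user_id, episode, favorite_setting=None):
    """Build one episode for one user.

    The same (user, episode, preference) always yields the same result.
    A favorite setting, if given, is weighted three times as heavily as
    each other setting (an assumed, arbitrary adaptation rule).
    """
    rng = random.Random(episode_seed(user_id, episode))
    weights = [3.0 if s == favorite_setting else 1.0 for s in SETTINGS]
    return {
        "setting": rng.choices(SETTINGS, weights=weights, k=1)[0],
        "goal": rng.choice(GOALS),
        "twist": rng.choice(TWISTS),
    }


if __name__ == "__main__":
    print(generate_episode("alice", 1))
    print(generate_episode("bob", 1, favorite_setting="desert ruins"))
```

Seeding from the user ID keeps each player's episodes reproducible while still differing between players; a generative model could replace the fixed pools without changing that structure.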

Environmental Design

Environmental design can be broken down into planning, conceptualization, modeling, texturing, lighting, animation, testing, and optimization. At each step, AI can help with data collection, personalization, and simulation. Companies like World Creator offer AI-powered tools for generating highly detailed virtual environments, with features such as procedural terrain generation, advanced texturing tools, and dynamic lighting effects. Autodesk uses AI to tackle challenges around “geometry understanding”, helping computers contextualize the geometry of the real world.

“It’s about how we can understand the geometry of the world around us – not just of objects, but of space.” According to Custis, Autodesk’s AI efforts will “absolutely” be important as the metaverse evolves.

“For example, how is a space organized? What are the things in it? How can we break it down into geometry and, then, what are its functions – because a computer does not know that.” - Tonya Custis, Director of Artificial Intelligence Research at Autodesk, VentureBeat interview
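
As a rough illustration of the procedural terrain generation mentioned above, the following Python sketch builds a heightmap by layering value noise at increasing detail. This is a generic textbook-style technique chosen for illustration, not World Creator's or Autodesk's actual method, and the function names and parameters (`value_noise_grid`, `generate_heightmap`, `octaves`) are assumptions.

```python
import random


def value_noise_grid(size, cells, rng):
    """Bilinearly interpolate random lattice values onto a size x size grid."""
    lattice = [[rng.random() for _ in range(cells + 1)] for _ in range(cells + 1)]
    grid = []
    for y in range(size):
        fy = y * cells / size
        y0 = int(fy)
        ty = fy - y0
        row = []
        for x in range(size):
            fx = x * cells / size
            x0 = int(fx)
            tx = fx - x0
            top = lattice[y0][x0] * (1 - tx) + lattice[y0][x0 + 1] * tx
            bottom = lattice[y0 + 1][x0] * (1 - tx) + lattice[y0 + 1][x0 + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        grid.append(row)
    return grid


def generate_heightmap(size=32, octaves=4, seed=0):
    """Sum several noise layers; each adds finer detail at half the amplitude."""
    rng = random.Random(seed)
    heights = [[0.0] * size for _ in range(size)]
    amplitude = 1.0
    total = 0.0
    for octave in range(octaves):
        layer = value_noise_grid(size, 2 ** (octave + 1), rng)
        for y in range(size):
            for x in range(size):
                heights[y][x] += amplitude * layer[y][x]
        total += amplitude
        amplitude *= 0.5
    # Normalize by the summed amplitudes so heights stay between 0 and 1.
    return [[h / total for h in row] for row in heights]


if __name__ == "__main__":
    terrain = generate_heightmap(size=16, seed=42)
    # Crude text rendering: higher ground gets a denser character.
    for row in terrain:
        print("".join(" .:-=+*#"[min(7, int(h * 8))] for h in row))
```

The same seed always reproduces the same terrain, which is what lets procedural worlds be shared or regenerated without storing every height value.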

Implications for the metaverse (our insights!)

In sum, these are, in our view, the main implications for the metaverse going forward:

Personalization: this shows up not only in the settings and design of metaverse applications but also in avatar engagement, which affects use cases in real-world industries.

Decreased time and labor costs: the time spent on manual work is falling sharply. We can see gaming studios and metaverse development companies reducing team sizes.

New talent: the emphasis on highly creative people who can work with AI has reached an all-time high, as AI changes the competitive landscape for companies and frees up budget for such talent.

Application layer over general-purpose models: general-purpose models like OpenAI's GPT-3 have a high barrier to entry and have already enjoyed an influx of capital from VCs and tech companies. Startups going forward may therefore focus more on the application layer, building AI designed for a particular task or domain. The same holds in the metaverse and gaming context.

Big companies have an unfair advantage in integrating AI with the metaverse, but startups can still benefit by using these tools to improve efficiency. The most efficient way to follow progress in AIGC and the metaverse is to track these big companies’ latest developments, especially NVIDIA, which has integrated AI into everything from chip design to avatar movement.

Echoing the theme of this article and following the changing tide, we consulted ChatGPT when collecting information and finding case studies for this piece.